Execution environment

Creating a planetary-scale supercomputer requires the participation of as many infrastructure providers as possible. Supporting this degree of scalability and openness requires a new approach to security, data privacy, and execution models. Isolation can be achieved through virtual machines or containers [65]. Virtual machines provide a higher degree of isolation and security [66] by emulating the entire hardware, but incur a larger execution overhead. Containers [67], [68] reduce this overhead by sharing the underlying host operating system’s kernel, at the cost of some isolation. Traditional isolation approaches operate on the premise that the host environment is trusted. Given the decentralized nature of the system and its openness toward infrastructure providers, certain actors may act maliciously. The conventional isolation mechanisms therefore need to be complemented with new security policies and mechanisms. The designed system must ensure protection at multiple levels:

- at the service consumer level, it must guarantee data privacy and provide accurate results in a finite time frame;

- at the infrastructure provider level, it must protect the execution environment against malicious code;

- at the AI innovator and data provider level, it must secure intellectual property and protect against unauthorized access.

A. Trusted Execution Environment

To achieve all the security requirements presented above, the Openfabric execution container utilizes the SGX enclave mechanism. Intel’s Software Guard Extensions (SGX) [69] is a mechanism that protects an application’s confidentiality and integrity, even if the OS, hypervisor, or BIOS is compromised. SGX also protects against attackers who have physical access to the machine. The primary SGX concept is the enclave [70], a fully isolated execution environment in terms of process space and virtual memory. The enclave memory containing the application code and data does not leave the CPU package unencrypted. When the memory content is loaded into the cache, a specialized hardware mechanism decrypts the content and verifies its integrity as well as the virtual-to-physical memory mapping. Upon startup, SGX performs a cryptographic check on the integrity of the enclave and provides attestation to remote systems or other enclaves [71], [72]. SGX provides two major benefits:

- the remote system cannot modify the program that is executed in the enclave;

- the code being executed is in plain text only inside the enclave, and it stays encrypted anywhere else.

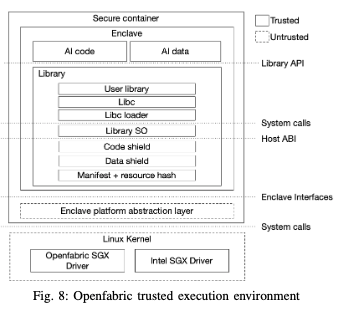

The proposed solution protects the execution environment against attack vectors and unauthorized access that may arise from the host machine: the infrastructure provider cannot view or alter user data or the AI code at runtime. Inspired by [71], [73] and [74], the Openfabric trusted execution environment (TEE) architecture combines a secure Docker [75] container with SGX enclave mechanisms. The Openfabric TEE architecture is shown in Fig. 8.

Fig. 8: Openfabric trusted execution environment

At the operating system level, specialized kernel drivers ensure SGX integration. The enclave abstraction layer mediates secure communication between the enclave and the system. Inside the enclave, the AI application code and data are secured by the built-in SGX hardware encryption mechanisms. The library stack includes an OS shield, a set of OS libraries, libc, and other user binaries. It provides mechanisms that allow access to the standard library, operating system calls, and dynamic library loading. The environment uses a manifest file that describes the resources the AI application requires in order to run; an application with a questionable manifest is refused execution. The manifest can also specify the hash (SHA-256) of trusted files and directories accessible from the enclave.
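The trusted-file check described above can be illustrated with a minimal sketch. The manifest layout (a `trusted_files` mapping from relative path to SHA-256 hex digest) and the function names are assumptions made for illustration; they are not the Openfabric manifest format, and in a real deployment the check is performed by the enclave library stack rather than by application code.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_file(path: Path) -> str:
    """Return the hex-encoded SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_trusted_files(manifest: dict, root: Path) -> list:
    """Return the manifest paths that are missing or whose hash differs."""
    failed = []
    for rel_path, expected in manifest["trusted_files"].items():
        target = root / rel_path
        if not target.is_file() or sha256_file(target) != expected:
            failed.append(rel_path)
    return failed


# Usage: record the hash of one file, then tamper with the file.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "model.bin").write_bytes(b"weights-v1")
    # Hypothetical manifest layout, assumed for this sketch only.
    manifest = {"trusted_files": {"model.bin": sha256_file(root / "model.bin")}}
    unchanged = verify_trusted_files(manifest, root)  # no file fails
    (root / "model.bin").write_bytes(b"weights-tampered")
    tampered = verify_trusted_files(manifest, root)   # model.bin fails
```

An empty result means every listed file matches its recorded hash; any other result would be grounds for refusing to start the application.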

B. Secure Execution Flow

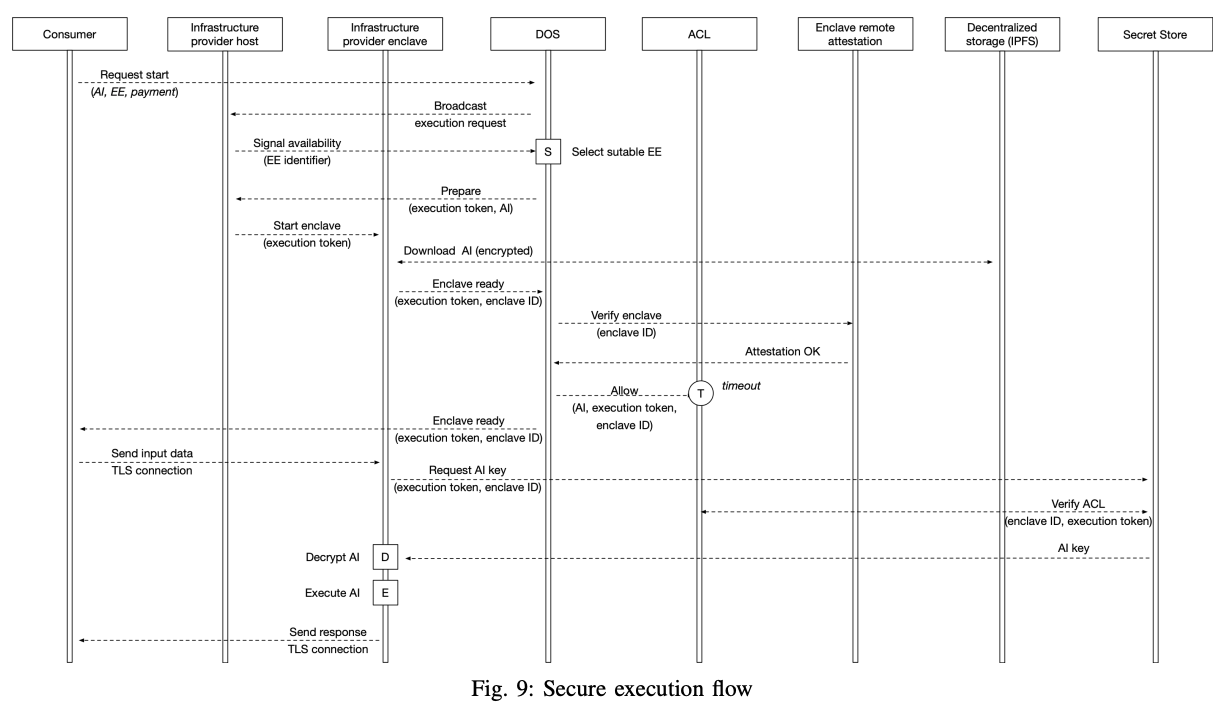

As depicted in Fig. 9, the trusted execution is ensured by the interaction between a set of system components, processes, and actors, as follows:

- The consumer initiates the process by submitting an execution request specifying the AI to run, and the hardware requirements to run it;

- The DOS broadcasts the request to the available infrastructure providers and selects one that matches the request;

- After the infrastructure provider is selected, it starts the process of bootstrapping the Enclave;

- Inside the Enclave, the encrypted AI binary is downloaded from the decentralized storage (e.g. IPFS [47]);

- Once the Enclave is ready, it connects to the DOS;

- The DOS checks the authenticity of the Enclave through remote attestation [76], [77];

- If attestation succeeds, the DOS adds a time-bound ACL (access-control list) entry, enabling the Enclave to run the specified AI;

- The consumer will receive the Enclave connection details;

- Through a secure TLS connection, the consumer sends the input data to the Enclave;

- The Enclave requests the key required to decrypt the AI binary from the Secret Store;

- If the Enclave ACL entry is valid, the Secret Store sends the key;

- With the received key, the Enclave decrypts and executes the AI binary;

- The process ends after the response is sent to the consumer.
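The attestation, ACL, and key-release steps of the flow above can be sketched as follows. This is a simplified illustration under stated assumptions: `attest`, `SecretStore`, `grant`, and `release_key` are hypothetical names, attestation is reduced to comparing a reported enclave measurement with an expected one (real SGX remote attestation verifies a signed quote), and the ACL is held in memory by the Secret Store rather than managed by the DOS.

```python
import hmac
import time
from dataclasses import dataclass, field
from typing import Optional


def attest(reported: str, expected: str) -> bool:
    """Simplified stand-in for remote attestation: compare measurements.

    Real SGX attestation verifies a signed quote; that step is omitted.
    """
    return hmac.compare_digest(reported.encode(), expected.encode())


@dataclass
class SecretStore:
    keys: dict = field(default_factory=dict)  # ai_id -> decryption key
    acl: dict = field(default_factory=dict)   # (enclave_id, ai_id) -> expiry

    def grant(self, enclave_id: str, ai_id: str, ttl: float,
              now: Optional[float] = None) -> None:
        """Add a time-bound ACL entry (the DOS step after attestation)."""
        now = time.time() if now is None else now
        self.acl[(enclave_id, ai_id)] = now + ttl

    def release_key(self, enclave_id: str, ai_id: str,
                    now: Optional[float] = None) -> bytes:
        """Return the key only while the ACL entry exists and is unexpired."""
        now = time.time() if now is None else now
        expiry = self.acl.get((enclave_id, ai_id))
        if expiry is None or now >= expiry:
            raise PermissionError("no valid ACL entry for this enclave")
        return self.keys[ai_id]


# Usage: an attested enclave obtains the key within its 60-second window.
store = SecretStore(keys={"ai-1": b"k" * 32})
if attest("abc123", "abc123"):  # placeholder measurement values
    store.grant("enclave-7", "ai-1", ttl=60, now=1000.0)
key = store.release_key("enclave-7", "ai-1", now=1030.0)
```

Passing `now` explicitly keeps the sketch deterministic; a request made after the entry expires, or by an enclave that was never granted an entry, raises `PermissionError` instead of returning the key.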

The presented design addresses all of the security requirements stated above. Keeping the AI binary accessible only inside the attested Enclave prevents unauthorized access and preserves the innovator’s intellectual property. The TLS communication channel between the Enclave and the consumer preserves data privacy.

Fig. 9: Secure execution flow