State of the art and beyond

A. Centralized Approaches

Centralized AI platforms dominate the current landscape. They provide users with the tools required to build intelligent applications. By combining decision-making algorithms with data, they enable developers to create advanced solutions. Some platforms offer pre-built algorithms and simple workflows with drag-and-drop modelling and visual interfaces, whereas others require in-depth knowledge of software development and coding.

IBM Watson

is based on cognitive computing technology, and was created to support AI-driven research and development for enterprise products [13]. It integrates everything from big data manipulation tools to data analytics and infrastructure configuration. Through its series of tools, the platform is tailored for large corporations and data-intensive applications [14]. IBM Watson operates in a classic service infrastructure, where clients pay the service provider directly for all operations, and the provider allocates resources and controls the flow.

Google AI

brings a comprehensive suite of tools, and covers the end-to-end cycle (from data ingestion to deployment) for building AI applications [15]. Aside from its smooth integration with other Google services and tools, this platform also favors the use of Kubernetes, the open-source container-orchestration system, to support safe and flexible development and deployment processes [16]. Endorsed by many major tech corporations (Intel, Nvidia, and others), the platform aims to offer its services not only to big enterprise clients, but also to small business solution providers.

Microsoft Azure AI

has evolved into an intelligence system built directly on top of the Microsoft Azure platform, with most of its features focused on three primary areas: AI apps & agents, Knowledge mining, and Machine learning. A heavily discussed topic in the context of Microsoft Azure AI is AutoML [17], which automates the application of machine learning to real-world problems, from the input dataset to the final result. The declared goal is to enable non-technical end users to apply ML to everyday problems [18], [19].

Amazon Machine Learning

presents a full-stack, cloud-based solution for machine learning development by providing infrastructure, frameworks, and services for Artificial Intelligence (AI) and Machine Learning (ML). Amazon ML speeds up the customer’s business process by allowing them to smoothly integrate machine learning algorithms. It also provides simple AI integration with the user’s applications, frameworks for deep learning, and comprehensive documentation [20]. It integrates existing frameworks such as TensorFlow, PyTorch, and Apache MXNet, which come pre-installed. Combining services from different ML stack levels is possible by using workloads.

B. Decentralized Approaches

As a response to the rigidity of centralized AI environments controlled by big corporations, several projects have begun using blockchain as a foundation for new AI services. These new models follow the principles of decentralized autonomous organizations, and empower communities to unite and contribute to swarm-like ecosystems.

SingularityNET

is a platform where users create, share, and monetize AI services at scale on top of a decentralized economy. All services revolve around a marketplace that connects creators with consumers. Although it plans to become blockchain agnostic, SingularityNET currently runs on Ethereum, and uses a consensus mechanism called Proof of Reputation, which was derived from Proof of Stake [21]. Aside from the components of the SingularityNET platform, Singularity Studio [22] uses external tools such as an inter-AI collaboration framework, the OpenCog Artificial General Intelligence engine, the TODA secure decentralized messaging protocol, and many others. Although SingularityNET utilizes a Proof of Reputation system, the token holders still control the democratic decision-making process.

Effect AI

integrates three components: a marketplace for outsourcing small tasks (Effect.AI Force), an AI registry with algorithms (Effect.AI Smart Market), and an infrastructure layer (Effect.AI Power). Effect AI is building a computational environment to apply AI automation processes to flexible data sources. Its blockchain structure was initially designed for NEO [23], but currently runs on EOS [24] and represents the foundation for interoperability of algorithms and services. Effect AI focuses on gathering a global network of freelancers to offer on-demand integrations for clients in different industry areas (sentiment analysis, language translation, chatbot training, etc.) [25].

Ocean Protocol

provides the infrastructure to link data providers, data consumers, service providers, marketplaces, and network keepers. The network architecture has 5 components: Frontend, Data Science Tools, Aquarius, Brizo, and Keeper Contracts. The core innovation behind the protocol lies in the decentralized layer, which offers Service Execution Agreements (SEAs) as a fundamental method for enabling tracking, rewards, and dispute resolution in a Web3 data supply chain. The Ocean token is at the centre of the economic model, and allows actors to share and monetize data while at the same time ensuring control, auditability, transparency, and compliance [26]. As stated in its technical papers, Ocean Protocol is a substrate for AI Data & Services. It focuses extensively on the infrastructure and governance of the protocol, which form the foundation of all other components.

DeepBrain Chain

was launched as an AI open platform in China, slowly evolving into a global platform which aims to reduce the cost of hardware for AI processing by 70%. DeepBrain decentralizes the neural network operations needed for training AI models. The incentive model relies on mining (processing), and rewards infrastructure providers with DBC tokens. Already-confirmed use-cases in areas such as Driverless Cars, Speech Recognition, and Tumor Detection reinforce the capabilities of the platform to match requests and resources in a privacy-driven environment [27].

Thought Network

promotes a new paradigm: smart logic embedded into every bit of data. The project aims to solve the AI black box problem [28], and is organized into 3 layers: Information, Fabric, and Compute. Across these layers, a patented model called Nuance introduces a new development standard that replaces application logic with container logic. Several blockchain layers govern the infrastructure, with each one running multi-level dynamic consensus methods. The current roadmap shows that the project only aims for a functional prototype, but the outcomes of such an approach are valuable for the AI community [29].

C. Current Challenges

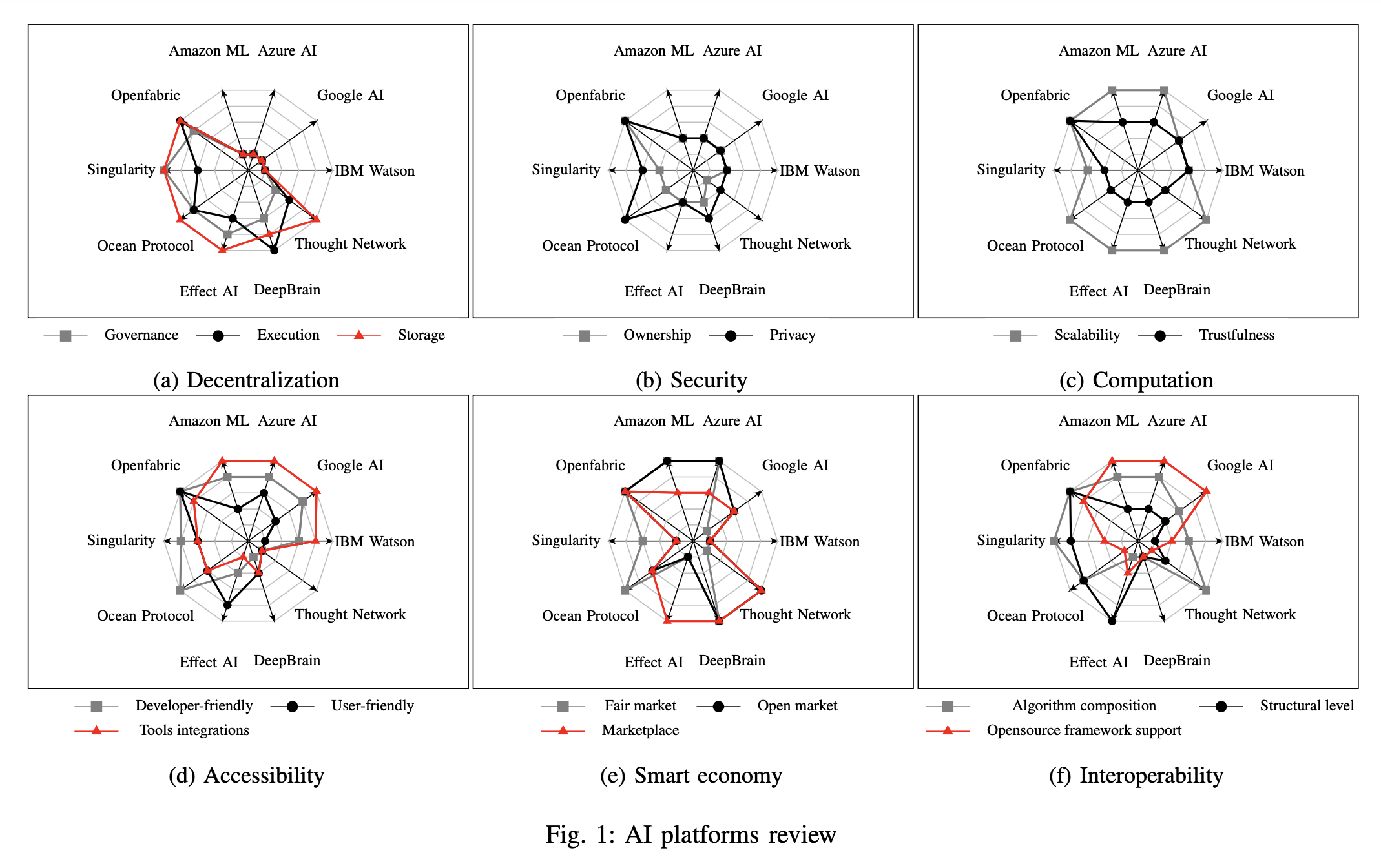

The centralized and decentralized approaches presented above offer a broad view of the current state of the field. The selected platforms serve as starting points for understanding the key ingredients, constraints, and pitfalls in advancing the domain. Fig. 1 provides a pragmatic and condensed side-by-side visual comparison of the critical traits that require attention. All values were collected by researching the official documentation, or by directly testing the solutions. The information is structured to show the main differences between existing AI ecosystems and the Openfabric approach. Openfabric aims to use this model as a guideline to empower community contributions, while keeping the environment safe for seamless business integration.

By surveying the current AI platforms related to the project scope, we have identified several challenges that need to be addressed in order to advance the field:

- Decentralization - no central entity that controls the location of data or information processing;

- Security - protect end-user privacy and guarantee intellectual property rights;

- Interoperability - use of standardized interfaces to allow multiple AI agents to cooperate and connect in providing relevant answers to complex problems;

- Accessibility - simplify the interactions between end users and AIs by providing straightforward, non-technical flows;

- Smart economy - create a built-in robust exchange medium that facilitates fair transactions between supply and demand of AI services;

- Computation - expand network capabilities by allowing network participants to rent their computing power for execution and training of AIs;

- Datastreams - provide mechanisms to distribute and consume vast amounts of data for training and execution.

Fig. 1: AI Platforms review
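The interoperability challenge listed above can be illustrated with a minimal sketch. It assumes a hypothetical common interface (`AIService`, with a single `run` method exchanging plain dictionaries) that every agent implements, so that independently written agents can be chained. The class names, the payload keys, and the toy sentiment logic are illustrative assumptions only, and do not describe the API of Openfabric or of any platform surveyed here.

```python
from typing import Any, Dict, List, Protocol


class AIService(Protocol):
    """Hypothetical standardized interface exposed by every agent."""

    name: str

    def run(self, payload: Dict[str, Any]) -> Dict[str, Any]:
        ...


class Tokenizer:
    """Toy agent: splits the input text into lowercase tokens."""

    name = "tokenizer"

    def run(self, payload: Dict[str, Any]) -> Dict[str, Any]:
        return {**payload, "tokens": payload["text"].lower().split()}


class KeywordSentiment:
    """Toy agent: scores tokens against small, made-up keyword lists."""

    name = "keyword-sentiment"
    POSITIVE = {"good", "great", "secure"}
    NEGATIVE = {"bad", "siloed", "rigid"}

    def run(self, payload: Dict[str, Any]) -> Dict[str, Any]:
        tokens = payload["tokens"]
        score = sum(t in self.POSITIVE for t in tokens)
        score -= sum(t in self.NEGATIVE for t in tokens)
        return {**payload, "sentiment": score}


def compose(services: List[AIService], payload: Dict[str, Any]) -> Dict[str, Any]:
    """Pass the payload through each agent in order."""
    for service in services:
        payload = service.run(payload)
    return payload


result = compose(
    [Tokenizer(), KeywordSentiment()],
    {"text": "Secure but siloed and rigid"},
)
print(result["tokens"], result["sentiment"])
```

Because both agents satisfy the same structural interface, `compose` does not need to know who wrote them or what they do internally. This is the property a standardized interface is meant to provide.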

D. Conclusions and Next Step Forward

After a complete overview of the domain (Sections A, B) and side-by-side technical comparisons identifying the challenges (Section C), the path towards the Internet of AI seems to be guided by two main conclusions:

- The centralized platforms are secure and reliable, but their components are private, the information is siloed, and the ecosystem is divided among the few big players.

- The decentralized platforms are slowly evolving into mature products with many use cases and relevant business models, but they need more standardization, flexibility, and open community involvement in order to produce a larger impact. In this context, Openfabric learns from existing approaches, combining the positive outcomes of both worlds (centralized and decentralized) to move closer to a genuine Internet of AI that can deliver the long-awaited visible progress.